Right now I am trying to delve deeper into data engineering. To do this, I chose a stack made up of Snowflake as the cloud data warehouse, Airflow to move data from one point to another, and dbt to transform the data into the shape I want.

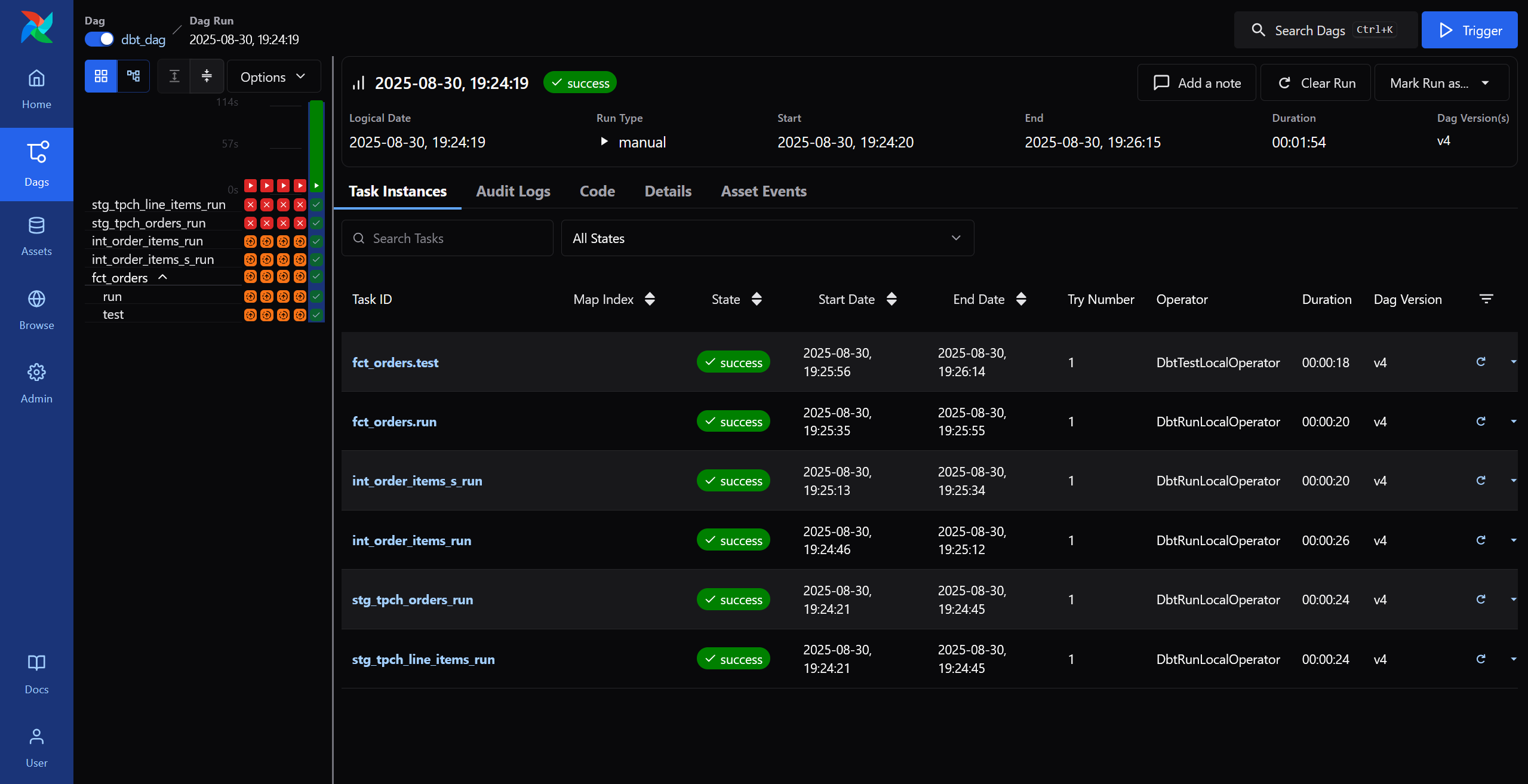

To start off, I did a small setup project following the Medium guide "Building Data Pipelines with Snowflake, Apache Airflow and dbt" by Ramiro Meija. In this project I created a small data pipeline that extracts, transforms and loads data from the TPC Benchmark H (TPC-H) example data warehouse. The final result can be seen in an Airflow page like this:

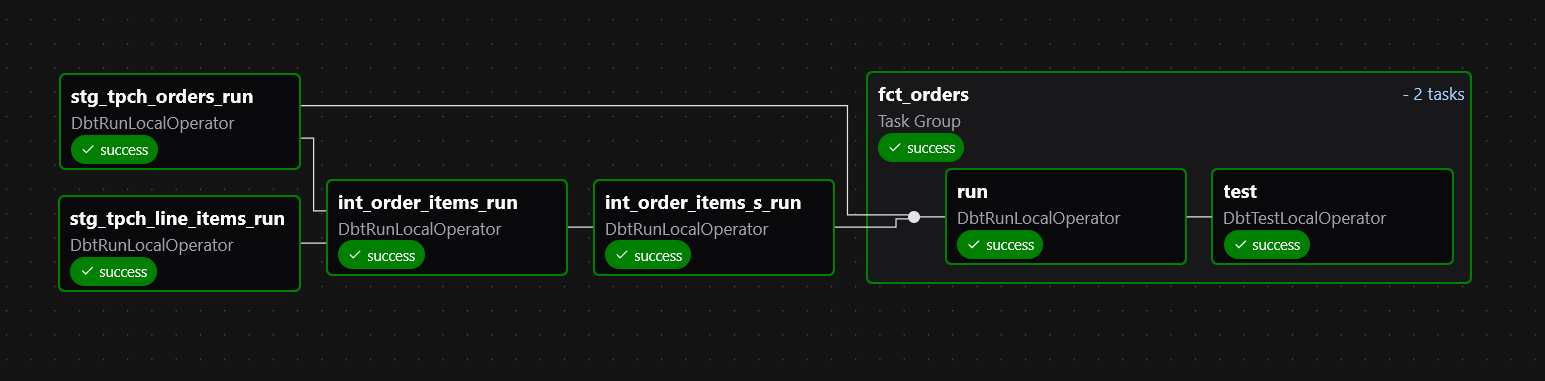
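
To make the idea behind that graph a bit more concrete, here is a minimal sketch in plain Python (no Airflow or dbt involved) of how dependencies between models decide the run order. The model names are hypothetical, loosely inspired by the TPC-H orders and line item tables, and are not necessarily the ones used in the guide.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dbt-style models mapped to the models they depend on.
# These names are assumptions made for illustration only.
dependencies = {
    "stg_orders": set(),
    "stg_line_items": set(),
    "int_order_items": {"stg_orders", "stg_line_items"},
    "fct_orders": {"int_order_items"},
}


def run_order(deps):
    """Return the models in an order where every dependency runs first."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(" -> ".join(order))
```

The staging models come first, then the intermediate model, and the final fact table runs last. An orchestrator like Airflow works on the same principle: a task only starts once the tasks it depends on have finished.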

The data pipeline itself can be seen here:

Next, my goal is to build my own, more personalized and more complex data pipeline using these same tools. My only limitation is the duration of the Snowflake free trial, which should end around 20/09, so hopefully I will get it done by then.